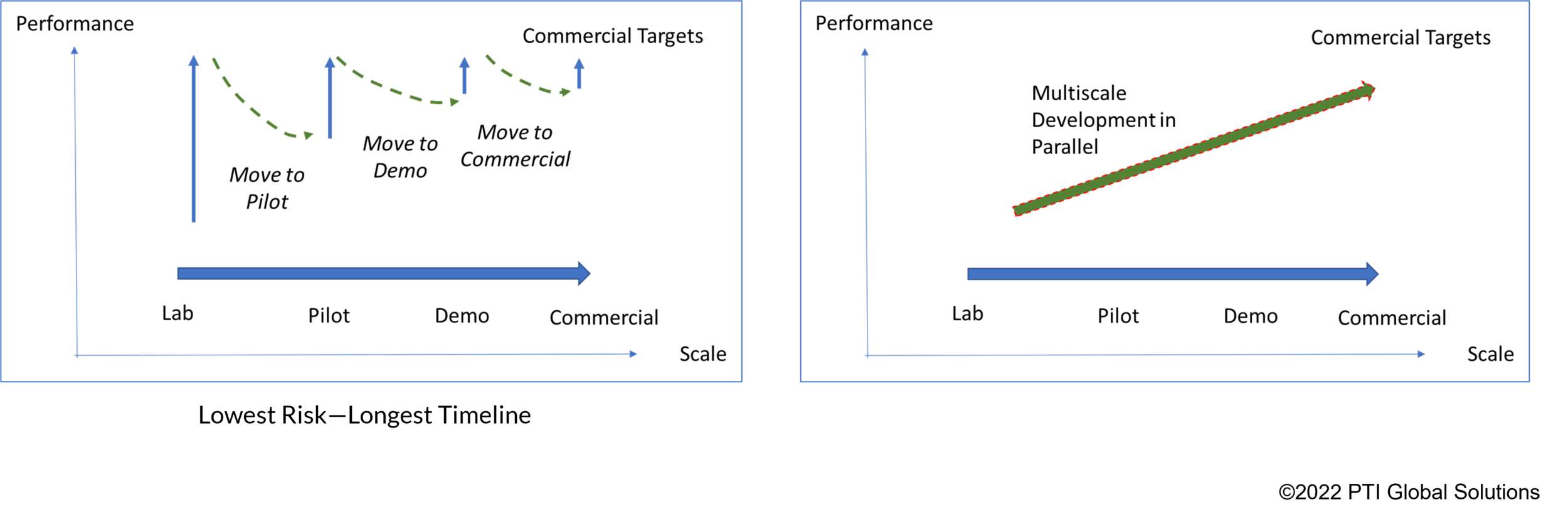

The pilot phase is a crucial step in the commercialization of any new process technology, particularly a sustainable one. It is the first significant opportunity to de-risk the science, generate engineering data for scale-up, and produce product samples for market development. However, because a fully developed pilot plant may cost millions of dollars and take anywhere from several months to more than a year to design and build, a natural tension exists: how do we design and execute a pilot campaign that meets our objectives and lays the groundwork for successful commercialization, while managing cost and minimizing time to commercialization?

Best practices for piloting a sustainable process technology include setting clear objectives, defining the scope, and executing effectively.

Setting Clear Objectives

Piloting a new sustainable product or process technology is not just about running experiments; it is about de-risking and generating value. Common objectives include:

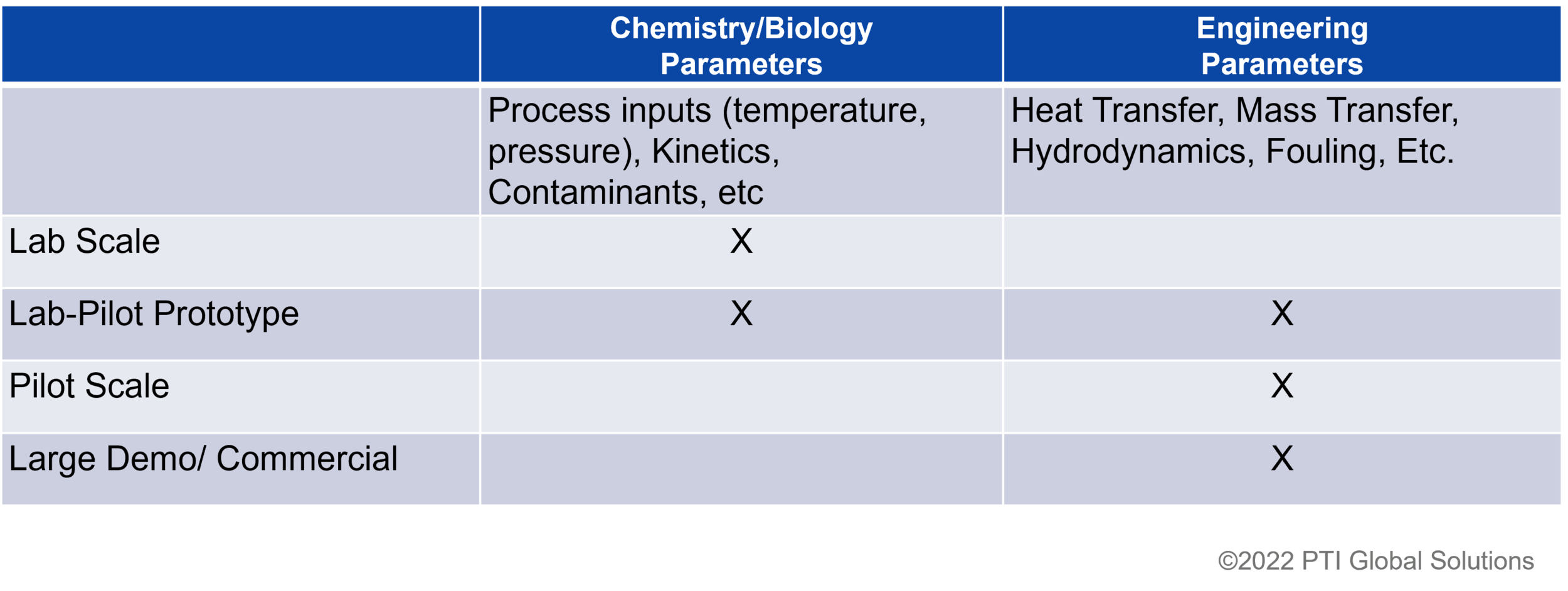

Generating Reliable Scale-Up Data: Building confidence in your process as you transition from lab to pilot scale.

Reducing and Mitigating Risk: Identifying and addressing technical challenges in critical risk areas.

Producing Market-Ready Samples: Delivering product samples for customer and market development, and building an understanding of how process parameters affect product properties.

Each of these objectives is critical to the commercialization roadmap. By defining them with clarity and precision, you ensure that every stage of the pilot phase contributes to broader business and technical goals.

Defining the Scope

The next step is to develop the scope for a pilot campaign. A key early decision is whether the pilot campaign (or at least its early phases) can be outsourced to one or more third-party contract manufacturing or piloting organizations with the capabilities the new technology requires, such as high-pressure reaction equipment, fermentation, or specialized separations.

Defining the scope is also critical for any new assets that are required, ensuring that objectives can be met while balancing capital cost and construction time. A staged approach, starting with initial goals and adding capabilities and equipment in later phases, can work well.

Finally, while I am a big believer that the primary product of a pilot plant is data, it's helpful at this point to critically assess the controls and analytical requirements for a pilot campaign. It is tempting to envision a fully automated pilot plant that can be run remotely and generate data with online analyzers throughout. The costs of doing this can really add up, so it is useful to walk through a scoping exercise during planning to separate what is critical at this point from what is 'nice to have'. Again, a staged approach can help: early phases of a pilot project might have minimal automation, with the ability to take samples for offline analysis, while later stages add instrumentation and controls.

Effective Execution: Turning Plans into Action

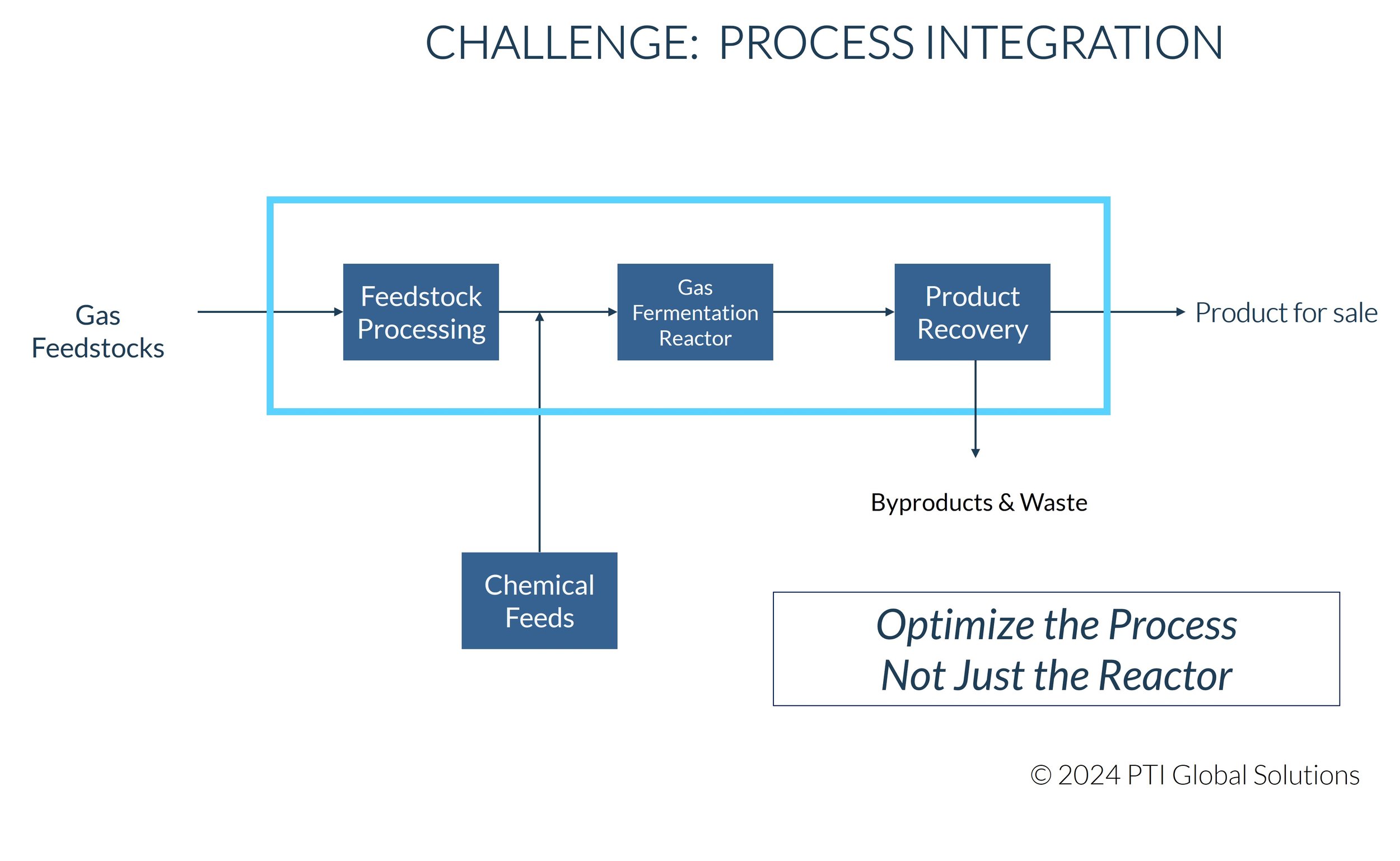

With objectives and scope established, the focus shifts to execution. Building the pilot plant is a multidisciplinary challenge, involving the design and installation of equipment such as feedstock handling systems, reactors, and product separation and purification units. Scaling up from the lab isn't just about going bigger; it means translating small-scale lab processes into larger, integrated systems that can be operated as a pilot plant.

A blended approach often works best—leveraging internal expertise while partnering with experienced third-party engineering, procurement, and construction (EPC) firms.

Finally, successful commissioning, startup, and operation are the culmination of this planning and execution. These stages validate that the pilot plant functions as intended, providing confidence for further scale-up and commercialization.

Process Safety

The pilot plant is a working process plant and should be treated as such. Process safety best practices must be followed throughout planning, design, and execution, including early-stage Hazard Identification (HAZID) methods, Hazard and Operability (HAZOP) studies, pre-startup safety reviews (PSSRs), and management of change (MOC) procedures. For HAZID and HAZOP studies, it is best to have an independent facilitator with no prior involvement in the project. For a smaller company, this means investing time and money in hiring an external facilitator. For a larger company, it can mean maintaining a roster of trained facilitators who can be called on to lead these studies. This one paragraph cannot do the topic justice. Useful resources include:

· https://www.aiche.org/sites/default/files/docs/summaries/rbps.pdf

· https://www.aiche.org/resources/publications/books/guidelines-risk-based-process-safety

To Pilot or Not?

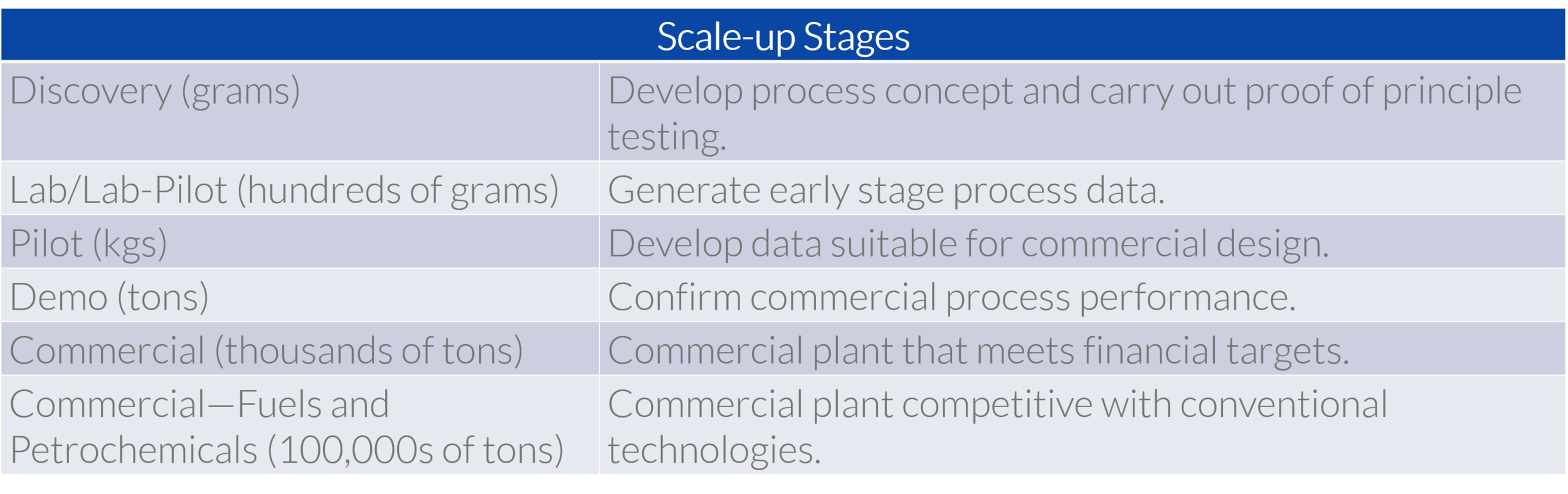

A controversial opinion in some circles is that it may be possible to 'skip' the pilot stage, with the key benefit being savings in the time and cost to reach commercialization. I've been part of a number of successful scale-ups that did not have dedicated pilot stages. These have included:

· A new type of distillation column, where we used extensive modeling studies and engineering assessments that convinced us we could move directly to commercial design.

· New specialty chemical products, where well-planned plant trials (in existing assets) substituted for a pilot phase.

· New thermochemical catalysts, where experience with packed-bed reactor systems allowed us to scale more than 1000X directly from lab-pilot scale to commercial scale.

The decision to bypass the piloting stage should not be taken lightly: don't just cross your fingers and hope for the best! A well-thought-out risk assessment will identify any safety, technical, or market risks that can and should be mitigated with a pilot phase. In all the cases described above, we determined that the risk scores in these areas were low enough to move forward without a pilot phase.
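To make the idea of "risk scores" concrete, here is a minimal sketch of a likelihood × severity risk register in Python. The 1 to 5 scales, the `PILOT_THRESHOLD` of 8, and the example risks are all hypothetical assumptions for illustration, not the scoring method used in the cases above; a real assessment would use your organization's own risk matrix and a cross-functional review.

```python
from dataclasses import dataclass

# Hypothetical threshold: any risk scoring above this suggests a pilot phase.
PILOT_THRESHOLD = 8


@dataclass(frozen=True)
class Risk:
    category: str     # e.g. "safety", "technical", "market"
    description: str
    likelihood: int   # assumed scale: 1 (rare) to 5 (almost certain)
    severity: int     # assumed scale: 1 (negligible) to 5 (catastrophic)

    def __post_init__(self) -> None:
        # Reject values outside the assumed 1-5 scales.
        for name in ("likelihood", "severity"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    def score(self) -> int:
        """Likelihood x severity score, ranging from 1 to 25."""
        return self.likelihood * self.severity


def risks_requiring_pilot(risks: list[Risk], threshold: int = PILOT_THRESHOLD) -> list[Risk]:
    """Return risks whose score exceeds the threshold, highest score first."""
    flagged = [r for r in risks if r.score() > threshold]
    return sorted(flagged, key=lambda r: r.score(), reverse=True)


def can_skip_pilot(risks: list[Risk], threshold: int = PILOT_THRESHOLD) -> bool:
    """True only if no risk in the register exceeds the threshold."""
    return not risks_requiring_pilot(risks, threshold)


if __name__ == "__main__":
    # Hypothetical register for illustration only.
    register = [
        Risk("safety", "Runaway exotherm in new reactor", likelihood=1, severity=5),
        Risk("technical", "Catalyst deactivation at scale", likelihood=3, severity=4),
        Risk("market", "Customers reject product specification", likelihood=2, severity=4),
    ]
    for risk in risks_requiring_pilot(register):
        print(f"[{risk.category}] {risk.description}: score {risk.score()}")
    print("Skip pilot?", can_skip_pilot(register))
```

With these made-up numbers, only the catalyst deactivation risk (score 12) exceeds the threshold, so the sketch recommends keeping a pilot phase to address it. A real assessment might also treat high-severity safety risks separately, regardless of their combined score.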

Laying the Groundwork for Commercial Success

In conclusion, the pilot phase is much more than a technical exercise. It is a critical step in the path to commercialization. By setting clear objectives, defining a precise scope, and executing with excellence, companies can navigate this phase with confidence and poise.

The message is clear: piloting is not a hurdle but an opportunity, a chance to innovate, optimize, and set the stage for future achievements.